Learning-Based Robotics Perception for Intelligent Manufacturing

We develop iLSPR, a learning-based scene point-cloud registration framework for precision industrial scene reconstruction. It targets the practical constraints of manufacturing: accuracy requirements are high, but far less data is available than in general scene reconstruction.

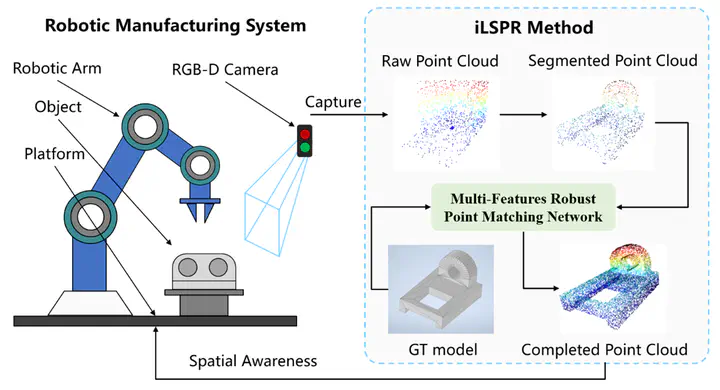

iLSPR builds the reconstruction pipeline around point clouds and a digital model library of target workpieces: an RGB-D sensor captures the scene, a predefined bounding box segments the target object point cloud, and iLSPR selects the object’s ground-truth model from the library and registers it back into the scene for accurate pose estimation and reconstruction.
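The crop-then-register steps of this pipeline can be sketched as follows. This is a minimal illustration, not the iLSPR implementation. `crop_to_box` and `kabsch` are hypothetical helper names, and the bounding box is assumed to be axis-aligned. The closed-form Kabsch alignment also assumes that point correspondences are already known, which is the part a learned registration module has to estimate in practice.

```python
import numpy as np

def crop_to_box(points, box_min, box_max):
    """Keep only the points inside an axis-aligned bounding box."""
    points = np.asarray(points, dtype=float)
    inside = np.all((points >= box_min) & (points <= box_max), axis=1)
    return points[inside]

def kabsch(source, target):
    """Least-squares rigid transform (R, t) with R @ source[i] + t ~ target[i].

    Assumes source and target are (N, 3) arrays with paired rows.
    """
    src_c = source.mean(axis=0)
    tgt_c = target.mean(axis=0)
    # 3x3 cross-covariance of the centered point sets
    H = (source - src_c).T @ (target - tgt_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1)
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t
```

Given a library model `model` and a scene cloud `scene`, a call such as `R, t = kabsch(model, crop_to_box(scene, box_min, box_max))` returns the pose that places the model in the scene, provided the cropped rows are paired with the model rows.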

The key module, MF-RPMN, fuses raw geometric inputs with deep features and integrates robust point matching to reduce data dependence and improve robustness to noise and varying point densities. A second component, GPDG, generates synthetic part point clouds from geometric primitives for efficient pretraining.
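To make the idea of primitive-based synthetic data concrete, the sketch below samples surface points from a few randomly sized spheres, cylinders, and boxes and stacks them into one cloud. It is an assumption-laden illustration rather than the GPDG procedure itself: the function names, size ranges, and the choice of three primitive types are all hypothetical, and points that fall inside an overlapping primitive are not removed.

```python
import numpy as np

def sample_sphere(n, radius, rng):
    """Uniform points on a sphere surface (normalized Gaussian directions)."""
    v = rng.normal(size=(n, 3))
    return radius * v / np.linalg.norm(v, axis=1, keepdims=True)

def sample_cylinder_side(n, radius, height, rng):
    """Uniform points on the side wall of a z-aligned cylinder (no caps)."""
    theta = rng.uniform(0.0, 2.0 * np.pi, n)
    z = rng.uniform(-height / 2, height / 2, n)
    return np.column_stack([radius * np.cos(theta), radius * np.sin(theta), z])

def sample_box(n, extents, rng):
    """Uniform points on the surface of an axis-aligned box centered at 0."""
    ex = np.asarray(extents, dtype=float)
    half = ex / 2
    # Area of the face pair perpendicular to each axis, used as sampling weight
    areas = np.array([ex[1] * ex[2], ex[0] * ex[2], ex[0] * ex[1]])
    axis = rng.choice(3, size=n, p=areas / areas.sum())
    pts = rng.uniform(-half, half, size=(n, 3))
    # Snap one coordinate per point onto the chosen face (+ or - side)
    sign = rng.choice([-1.0, 1.0], size=n)
    pts[np.arange(n), axis] = sign * half[axis]
    return pts

def synth_part(n_points=1024, n_primitives=3, noise_std=0.0, seed=0):
    """Stack randomly sized and placed primitives into one synthetic cloud."""
    rng = np.random.default_rng(seed)
    counts = [len(c) for c in np.array_split(np.arange(n_points), n_primitives)]
    parts = []
    for n in counts:
        kind = rng.integers(3)
        size = rng.uniform(0.01, 0.05, size=3)  # hypothetical size range
        if kind == 0:
            pts = sample_sphere(n, size[0], rng)
        elif kind == 1:
            pts = sample_cylinder_side(n, size[0], size[1], rng)
        else:
            pts = sample_box(n, size, rng)
        parts.append(pts + rng.uniform(-0.05, 0.05, size=3))  # random offset
    cloud = np.vstack(parts)
    # Optional Gaussian jitter to mimic sensor noise
    return cloud + rng.normal(scale=noise_std, size=cloud.shape)
```

Because the generator is seeded, `synth_part(seed=k)` reproduces the same cloud for a given `k`, which is convenient when building a fixed pretraining set.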

To benchmark performance, we introduce the ISOPR dataset, built in NVIDIA Isaac Sim, with 2,000 partial point clouds from 67 workpiece models, and report registration accuracy that improves on RPMNet (e.g., MAE-t of 0.004 and MAE-r of 0.297).
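The text does not define MAE-t and MAE-r or give their units. One common convention in point-cloud registration benchmarks, assumed here, is the mean absolute error over translation components and over Euler angles in degrees. The sketch below follows that assumption; `euler_zyx_deg` and `mae_metrics` are hypothetical names, and the ZYX Euler convention is an illustrative choice.

```python
import numpy as np

def euler_zyx_deg(R):
    """Roll, pitch, yaw in degrees for R = Rz(yaw) @ Ry(pitch) @ Rx(roll).

    Accepts a single 3x3 matrix or a batch of shape (..., 3, 3).
    """
    R = np.asarray(R, dtype=float)
    pitch = -np.arcsin(np.clip(R[..., 2, 0], -1.0, 1.0))
    roll = np.arctan2(R[..., 2, 1], R[..., 2, 2])
    yaw = np.arctan2(R[..., 1, 0], R[..., 0, 0])
    return np.degrees(np.stack([roll, pitch, yaw], axis=-1))

def mae_metrics(R_pred, t_pred, R_gt, t_gt):
    """Return (MAE-r in degrees, MAE-t in the units of t) under the assumed convention."""
    diff = euler_zyx_deg(R_pred) - euler_zyx_deg(R_gt)
    diff = (diff + 180.0) % 360.0 - 180.0  # wrap angle differences to [-180, 180)
    mae_r = float(np.mean(np.abs(diff)))
    mae_t = float(np.mean(np.abs(np.asarray(t_pred) - np.asarray(t_gt))))
    return mae_r, mae_t
```

Both values are averaged over all test pairs and all three axes, so a single large error on one axis is diluted. Other evaluations use the geodesic rotation angle instead, which is not directly comparable.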

We further validate iLSPR in a real-world prototype and show it can accurately select and register ground-truth point clouds into real scenes, enabling reliable industrial reconstruction for downstream tasks.